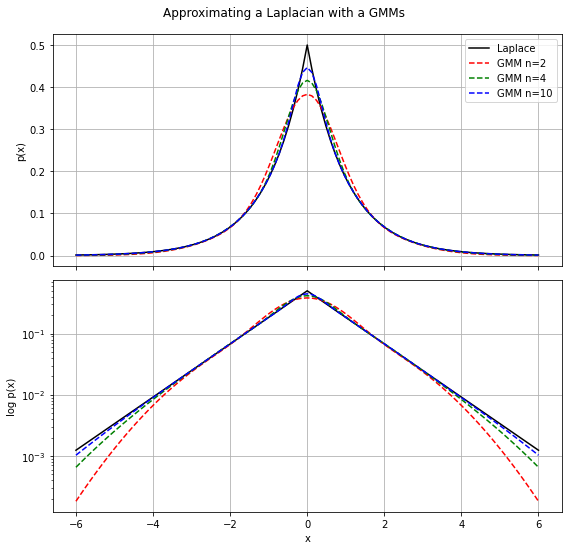

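The Laplacian distribution is an interesting alternative building block to the Gaussian distribution because it has much fatter tails: its density falls off as exp(-|x|) rather than exp(-x²/2). A drawback is that some of the nice analytical properties the Gaussian distribution gives you don't easily translate to the Laplacian. In those cases it can be handy to approximate the Laplacian with a mixture of Gaussians. This works because the Laplacian is itself a continuous scale mixture of zero-mean Gaussians whose variance is exponentially distributed. Replacing that continuous mixture with n equally weighted components, whose variances sit at evenly spaced quantiles of the exponential distribution, gives the following approximation: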

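Written out, and assuming a Laplacian with scale $b$, the scale-mixture identity reads as follows. The exponential density inside the integral has mean $2b^2$. The second line is the discretised, equal-weight mixture that the code implements for $b = 1$. The midpoints $u_i$ are symmetric under $u \mapsto 1 - u$, so $-2\ln u_i$ yields the same set of variances as the exponential quantiles $-2\ln(1 - u_i)$.

$$
\frac{1}{2b}\,e^{-|x|/b} = \int_0^\infty \mathcal{N}\!\left(x;\,0,\,v\right)\,\frac{1}{2b^2}\,e^{-v/(2b^2)}\,dv
$$

$$
\frac{1}{2}\,e^{-|x|} \approx \frac{1}{n}\sum_{i=1}^{n}\mathcal{N}\!\left(x;\,0,\,\sigma_i^2\right),
\qquad \sigma_i = \sqrt{-2\ln u_i},
\qquad u_i = \frac{i - \tfrac{1}{2}}{n}
$$

To get the approximation for another scale $b$, multiply every $\sigma_i$ by $b$.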

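```python
import numpy as np
from scipy.stats import norm


def laplacian_gmm(n=4):
    """Weights and standard deviations of an n-component Gaussian mixture
    approximating the standard Laplacian, p(x) = exp(-|x|) / 2."""
    # all components have the same weight
    weights = np.repeat(1.0 / n, n)
    # centers of the n equal-width bins in the interval [0, 1]
    uniform = np.arange(0.5 / n, 1.0, 1.0 / n)
    # Uniform- to Exponential-distribution transform (variance ~ Exp with mean 2),
    # followed by a square root to turn each variance into a standard deviation
    sigmas = np.sqrt(-2.0 * np.log(uniform))
    return weights, sigmas


def laplacian_gmm_pdf(x, n=4):
    """Evaluate the mixture density at x (scalar or array)."""
    weights, sigmas = laplacian_gmm(n)
    x = np.asarray(x, dtype=float)  # float accumulator, even for integer input
    p = np.zeros_like(x)
    for w, s in zip(weights, sigmas):
        p += w * norm.pdf(x, loc=0.0, scale=s)
    return p
```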
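One way to check the approximation is to compare it against the exact density. The sketch below uses SciPy's `scipy.stats.laplace`, whose default `loc=0, scale=1` matches the standard Laplacian the mixture targets. So that the block runs on its own, it re-implements the mixture density in vectorised form. The helpers `max_abs_error` and `mixture_variance` are hypothetical names introduced only for this check.

```python
import numpy as np
from scipy.stats import laplace, norm


def laplacian_gmm_pdf(x, n=4):
    """Vectorised n-component mixture approximating the standard Laplacian exp(-|x|)/2."""
    u = (np.arange(n) + 0.5) / n          # midpoints of n equal-probability bins
    sigmas = np.sqrt(-2.0 * np.log(u))    # Exp(mean 2) quantiles -> component std devs
    x = np.asarray(x, dtype=float)
    # Evaluate every component at every x (trailing axis = component), then average.
    return norm.pdf(x[..., None], loc=0.0, scale=sigmas).mean(axis=-1)


def max_abs_error(n, xs):
    """Hypothetical helper: largest gap between the mixture and the exact Laplace(0, 1) pdf on xs."""
    return np.max(np.abs(laplacian_gmm_pdf(xs, n) - laplace.pdf(xs)))


def mixture_variance(n):
    """Hypothetical helper: variance of the equal-weight, zero-mean mixture (mean component variance)."""
    u = (np.arange(n) + 0.5) / n
    return np.mean(-2.0 * np.log(u))


xs = np.linspace(-6.0, 6.0, 1201)
for n in (4, 16, 64, 256):
    print(f"n={n:4d}  max |error| = {max_abs_error(n, xs):.4f}  variance = {mixture_variance(n):.4f}")
```

The exact Laplacian has variance 2b² = 2, so `mixture_variance(n)` should approach 2 as n grows. The density error is concentrated around the cusp at x = 0. A finite sum of smooth Gaussians cannot reproduce that cusp, so the error there shrinks only slowly as n increases.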