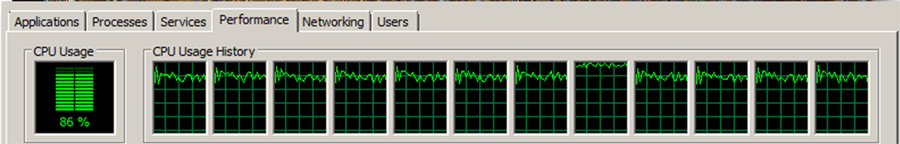

The Python code snippet below uses the multiprocessing library to process a list of tasks in parallel using a pool of 5 worker processes.

Note: Python also has a multithreading library called “threading”, but it is well documented that Python multithreading does not speed up CPU-bound tasks, because Python’s Global Interpreter Lock (GIL) lets only one thread execute Python bytecode at a time. For more info, search for “python multithreading gil”.
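As a brief aside before the grid-search snippet, the GIL effect mentioned in the note can be observed with a small timing comparison. This is only a sketch: `cpu_task` and the workload size are hypothetical, and the timings depend on the machine and the interpreter (on a standard CPython build the thread version typically shows little or no speedup, while a free-threaded build may behave differently).

```python
import time
from concurrent.futures import ThreadPoolExecutor
from multiprocessing import Pool


def cpu_task(n):
    # Pure-Python arithmetic loop: CPU-bound, so it holds the GIL while it runs
    return sum(i * i for i in range(n))


if __name__ == "__main__":
    work = [2_000_000] * 4  # hypothetical workload: four equal CPU-bound tasks

    # Threads: tasks share one interpreter and take turns holding the GIL
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=4) as ex:
        thread_results = list(ex.map(cpu_task, work))
    print(f'threads:   {time.perf_counter() - start:.2f}s')

    # Processes: each worker has its own interpreter and its own GIL
    start = time.perf_counter()
    with Pool(4) as p:
        process_results = p.map(cpu_task, work)
    print(f'processes: {time.perf_counter() - start:.2f}s')

    # Both approaches compute the same values; only the wall time differs
    assert thread_results == process_results
```

For I/O-bound work (waiting on the network or disk), threads are usually fine, because the GIL is released while a thread waits.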

from multiprocessing import Pool
import itertools
import time


def train(opt, delay=2.0):
    # Stand-in for a real training run: sleep, then report the settings used
    time.sleep(delay)
    return f'Done training {opt}'


# Grid search
grid = {
    'batch_size': [32, 64, 128],
    'learning_rate': [1E-4, 1E-3, 1E-2]
}


def main():
    # Build one settings dict per combination of grid values
    settings_list = []
    for values in itertools.product(*grid.values()):
        settings_list.append(dict(zip(grid.keys(), values)))

    # Run the tasks on a pool of 5 worker processes
    with Pool(5) as p:
        print(p.map(train, settings_list))


if __name__ == "__main__":
    main()

Output

[
    "Done training {'batch_size': 32, 'learning_rate': 0.0001}",
    "Done training {'batch_size': 32, 'learning_rate': 0.001}",
    "Done training {'batch_size': 32, 'learning_rate': 0.01}",
    "Done training {'batch_size': 64, 'learning_rate': 0.0001}",
    "Done training {'batch_size': 64, 'learning_rate': 0.001}",
    "Done training {'batch_size': 64, 'learning_rate': 0.01}",
    "Done training {'batch_size': 128, 'learning_rate': 0.0001}",
    "Done training {'batch_size': 128, 'learning_rate': 0.001}",
    "Done training {'batch_size': 128, 'learning_rate': 0.01}"
]
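The results above come back in the same order as the input list, because `Pool.map` preserves input order and only returns once every task has finished. A possible variation, sketched below, uses `imap_unordered` to print each result as soon as its task completes. The helpers `expand_grid` and `estimated_rounds` and the shortened 0.2 s delay are assumptions made for illustration, not part of the original snippet.

```python
import itertools
import math
import time
from multiprocessing import Pool


def train(opt, delay=0.2):
    # Stand-in for a real training run; the short delay is an assumption
    time.sleep(delay)
    return f'Done training {opt}'


def expand_grid(grid):
    # One settings dict per combination of hyperparameter values
    keys = list(grid)
    return [dict(zip(keys, values)) for values in itertools.product(*grid.values())]


def estimated_rounds(n_tasks, n_workers):
    # With equal-length tasks, the pool works through them in rounds
    return math.ceil(n_tasks / n_workers)


grid = {
    'batch_size': [32, 64, 128],
    'learning_rate': [1E-4, 1E-3, 1E-2]
}

if __name__ == "__main__":
    settings_list = expand_grid(grid)
    print(f'{len(settings_list)} tasks, '
          f'about {estimated_rounds(len(settings_list), 5)} rounds on 5 workers')

    start = time.perf_counter()
    with Pool(5) as p:
        # imap_unordered yields each result as soon as its task finishes
        for result in p.imap_unordered(train, settings_list):
            print(f'{time.perf_counter() - start:.1f}s  {result}')
```

With 9 equal-length tasks and 5 workers, the work takes roughly two rounds: five tasks run first, then the remaining four. Applied to the original 2-second delay, that suggests a total of about 4 seconds plus process start-up overhead, rather than the 18 seconds a sequential loop would need.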